About

Interests

News

- [Pinned] Honored to be selected for Ant Group's Top AI Talent Program — ANT Star (with an annual salary exceeding RMB one million).

- Two papers on adversarial training and unlearning accepted to ICASSP 2026.

- Three papers accepted to NeurIPS 2025, with one selected as a Spotlight paper.

- One paper on adversarial examples for segmentation models accepted to TMM 2025.

- One paper on backdoor attacks accepted to TIFS 2025.

- One paper on backdoor detection accepted to CVPR 2025.

- Proud to lead my team to win the National Championship in the 1st China Adversarial Algorithm Challenge.

- One paper on physical-world jailbreak attacks against embodied intelligence accepted to ICLR 2025.

- Three papers on adversarial examples for object detectors, unlearnable example detection, and physical-world adversarial attacks accepted to AAAI 2025, with one selected for Oral presentation.

- Two papers on adversarial examples for SAM and unlearnable examples accepted to NeurIPS 2024.

- One paper on physical-world backdoor attacks against object detection accepted to IJCAI 2024.

- One paper on adversarial training accepted to IEEE S&P 2024 (BIG4).

- One paper on multimodal adversarial examples accepted to ACM Multimedia 2023.

- One paper on adversarial examples accepted to ICCV 2023.

Publications

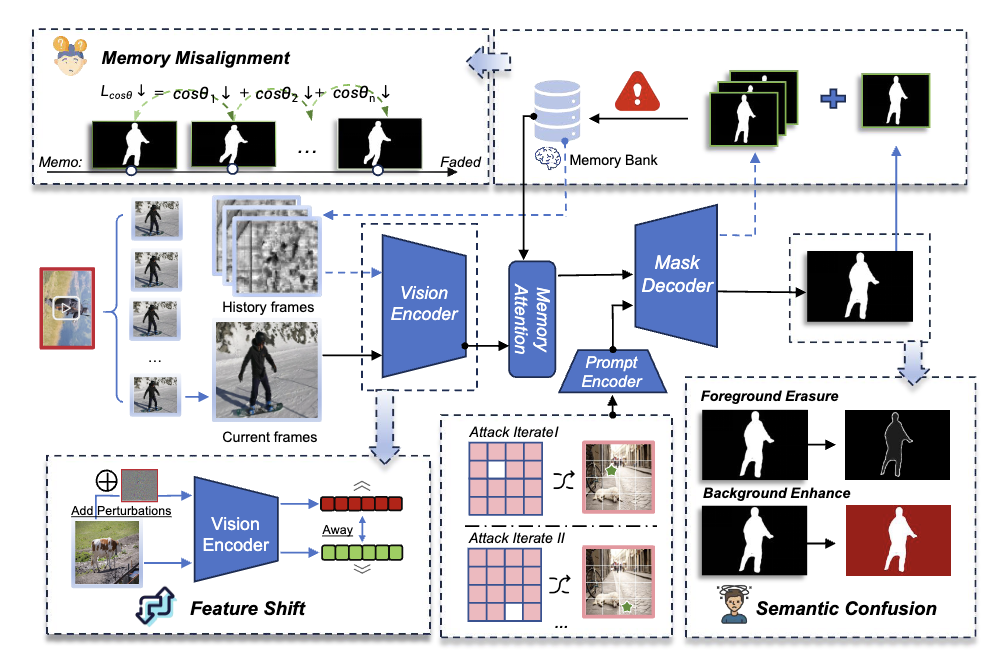

Vanish into Thin Air: Cross-prompt Universal Adversarial Attacks for SAM2

NeurIPS 2025 (Spotlight)

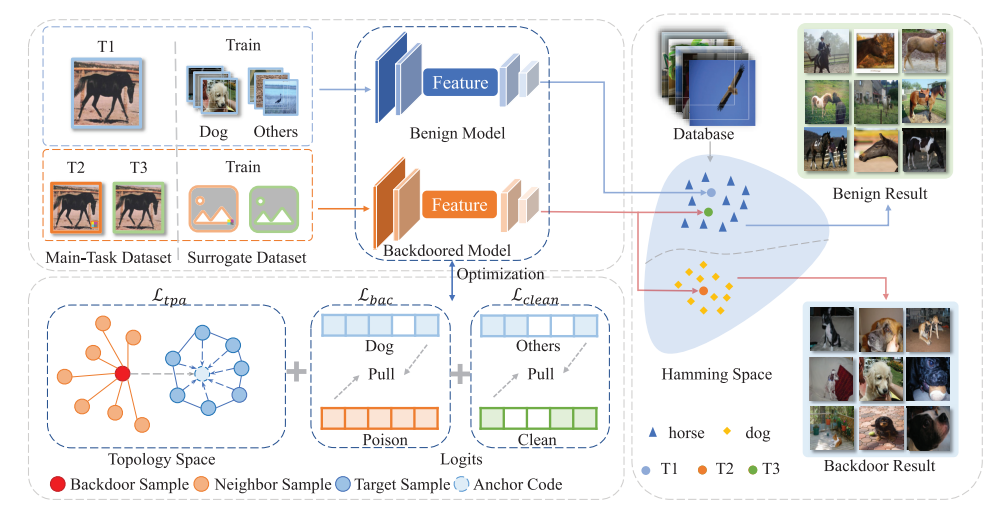

DarkHash: A Data-Free Backdoor Attack Against Deep Hashing

TIFS 2025

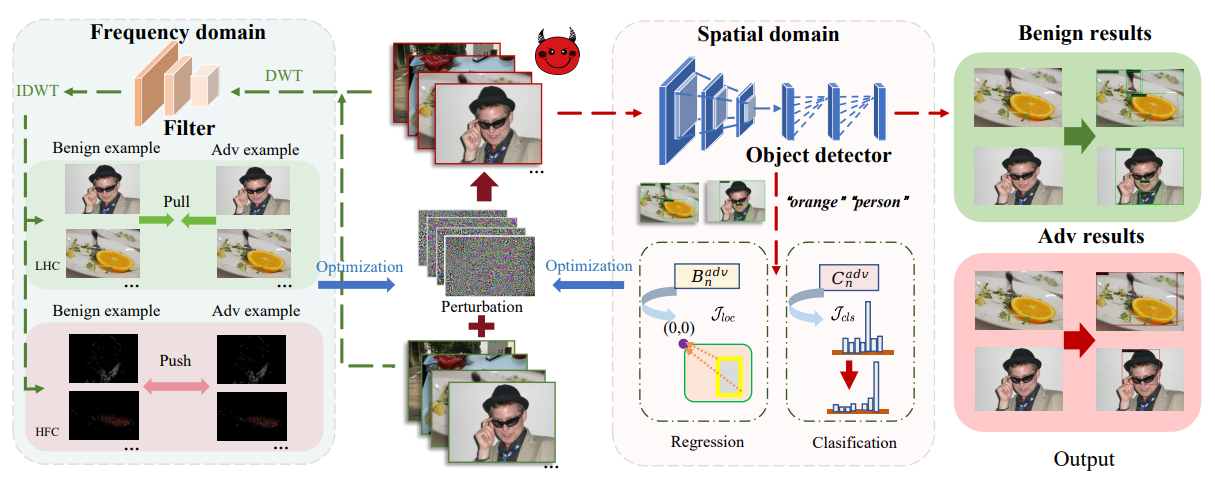

NumbOD: A Spatial-Frequency Fusion Attack Against Object Detectors

AAAI 2025

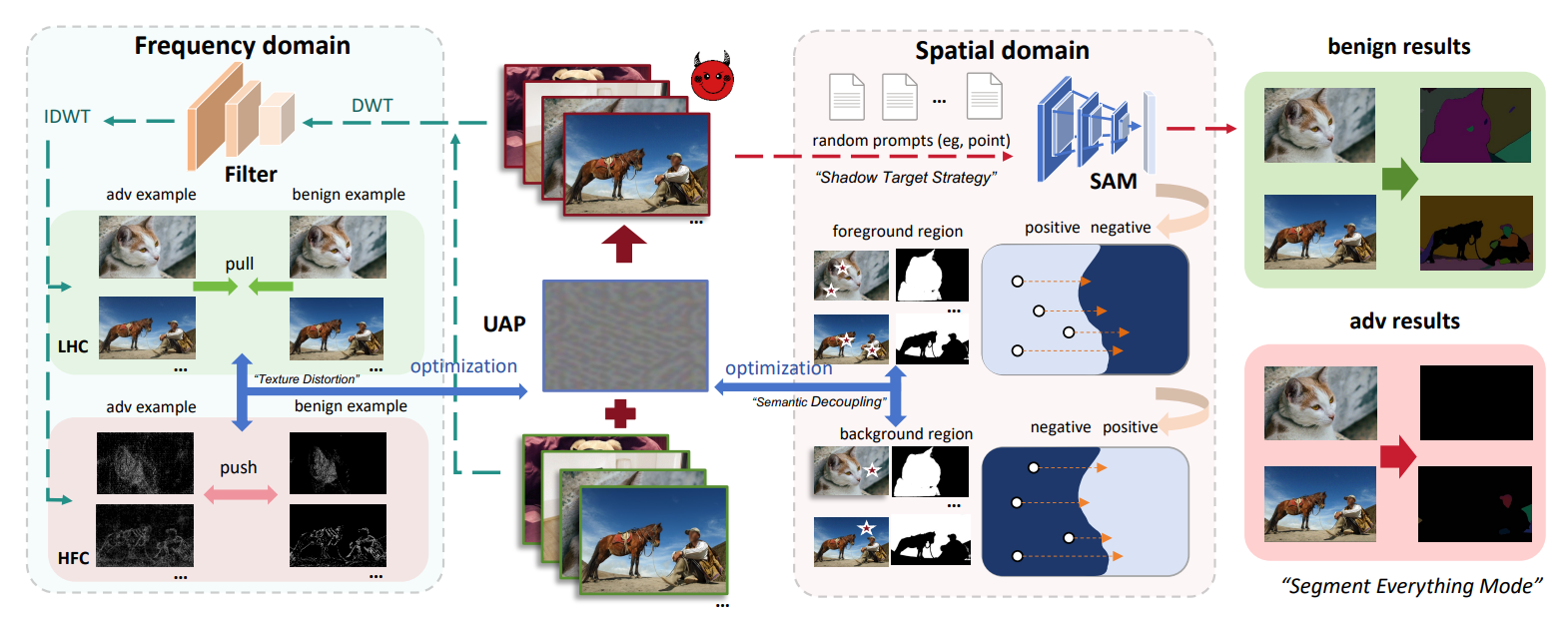

DarkSAM: Fooling Segment Anything Model to Segment Nothing

NeurIPS 2024

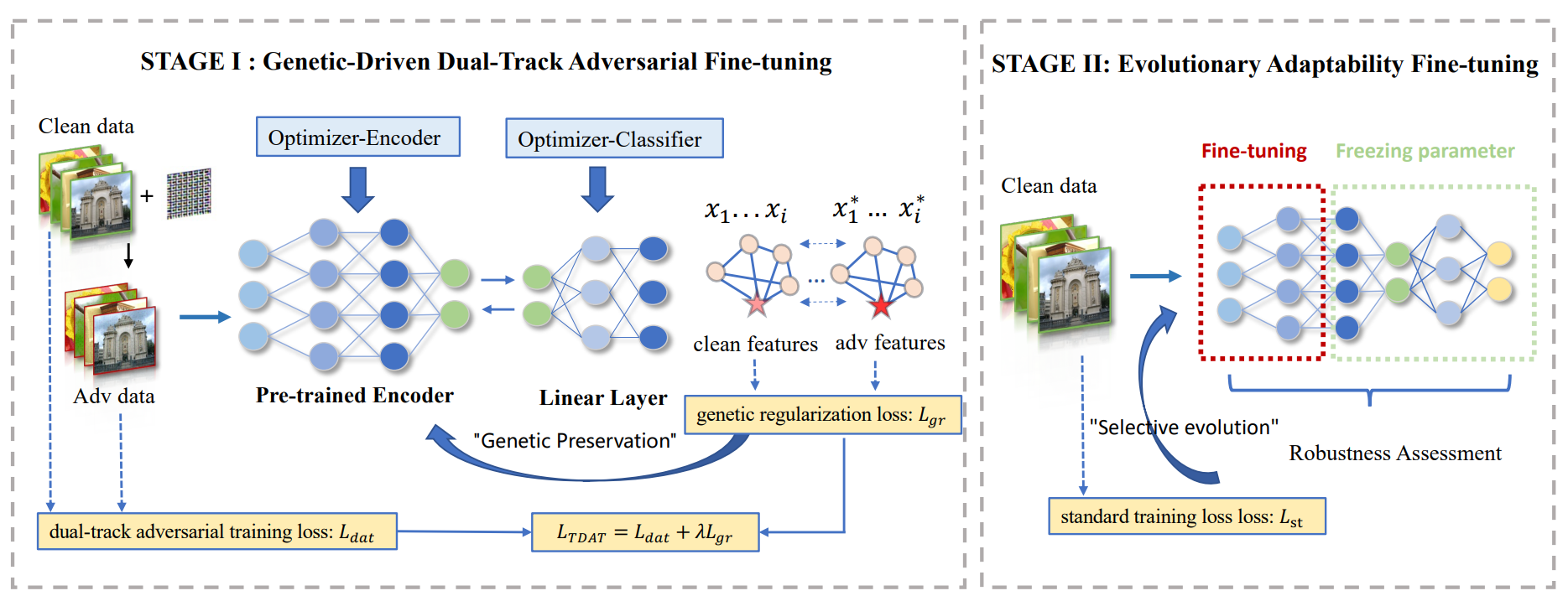

Securely Fine-tuning Pre-trained Encoders Against Adversarial Examples

IEEE S&P 2024

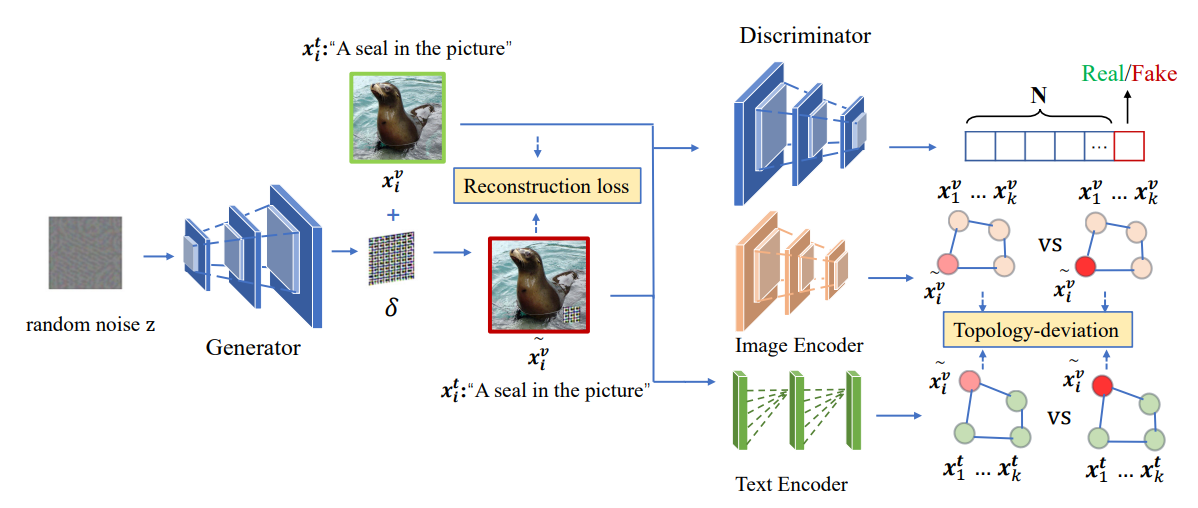

AdvCLIP: Downstream-agnostic Adversarial Examples in Multimodal Contrastive Learning

ACM MM 2023

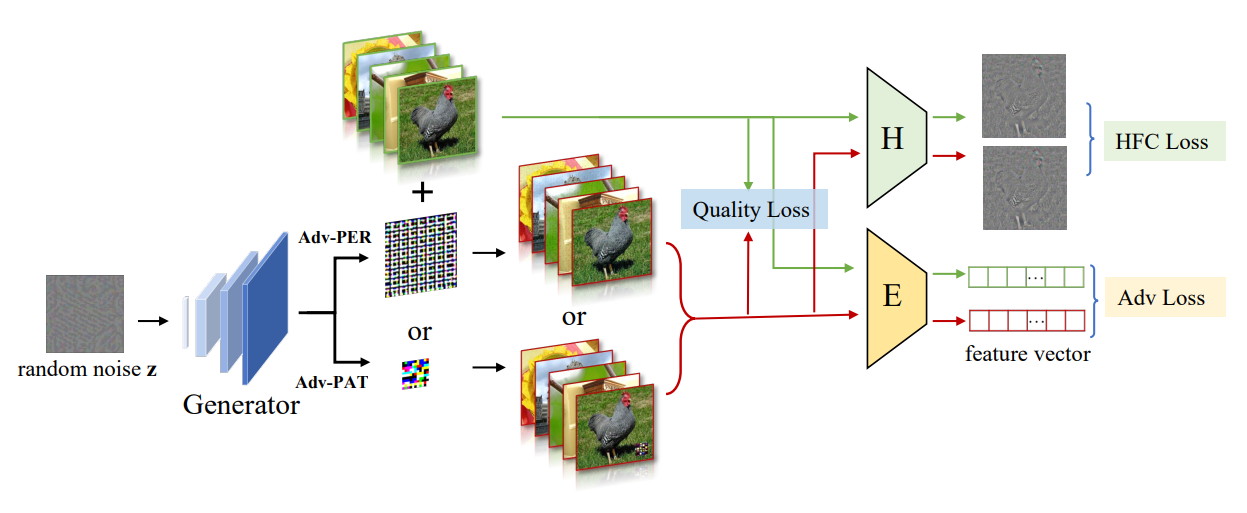

Downstream-agnostic Adversarial Examples

ICCV 2023

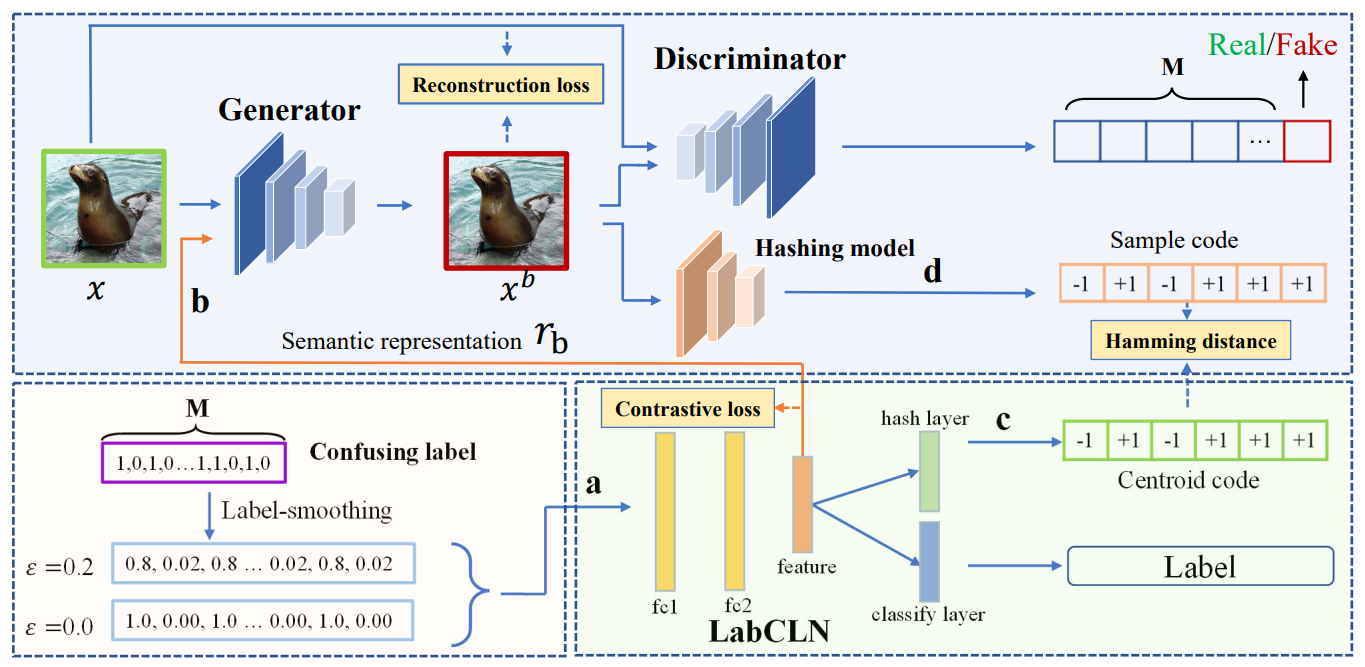

BadHash: Invisible Backdoor Attacks against Deep Hashing with Clean Label

ACM MM 2022

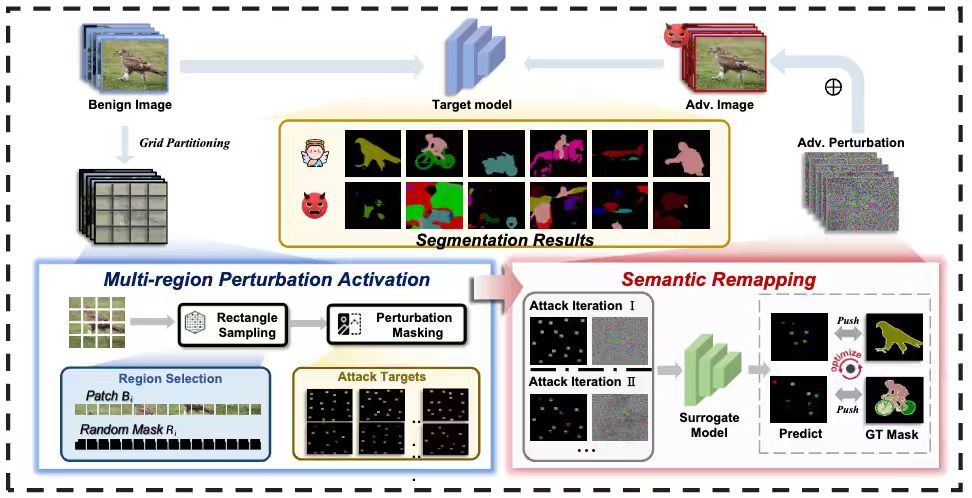

SegTrans: Transferable Adversarial Examples for Segmentation Models

TMM 2025

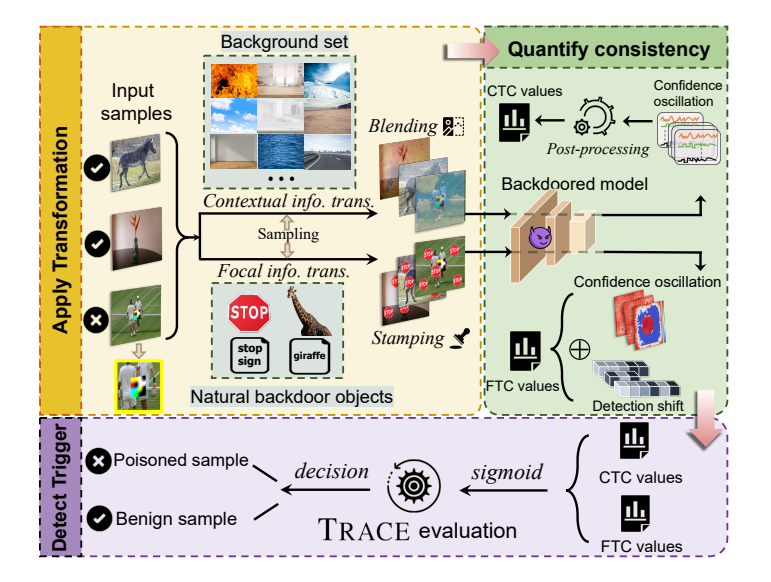

Test-Time Backdoor Detection for Object Detection Models

CVPR 2025

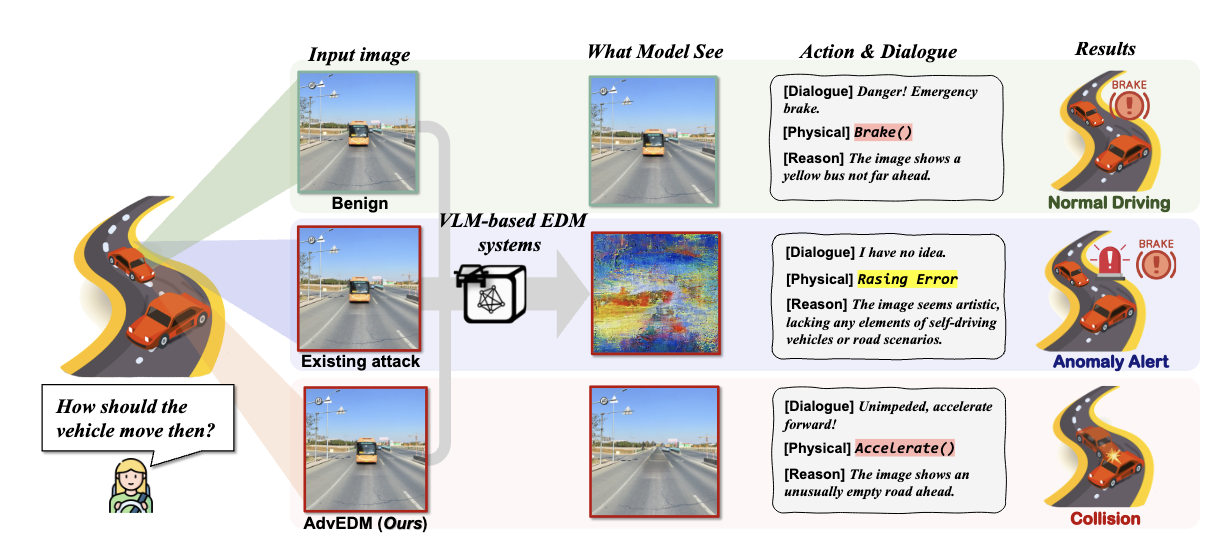

AdvEDM: Fine-grained Adversarial Attack against VLM-based Embodied Decision-Making Systems

NeurIPS 2025

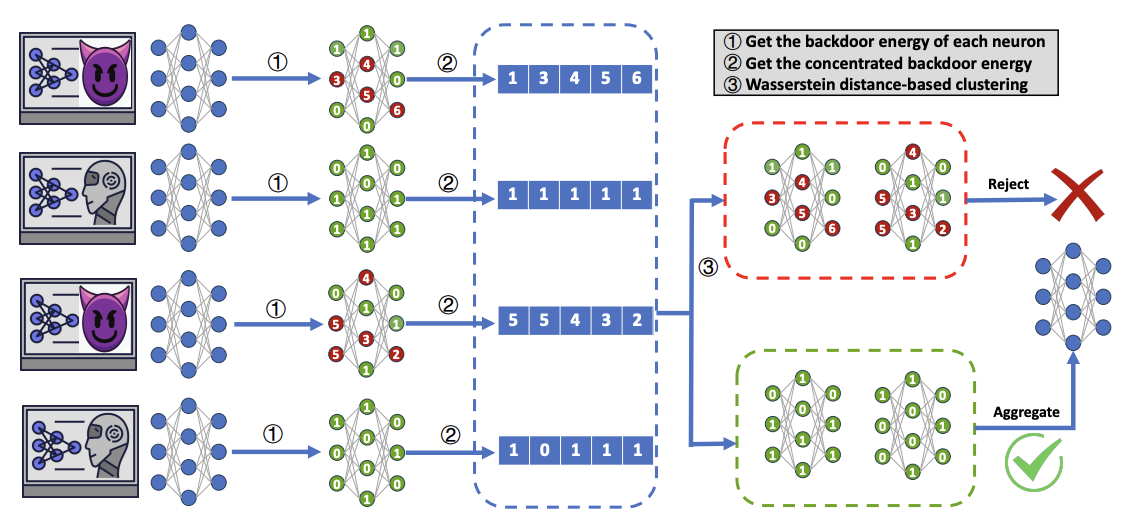

MARS: A Malignity-Aware Backdoor Defense in Federated Learning

NeurIPS 2025

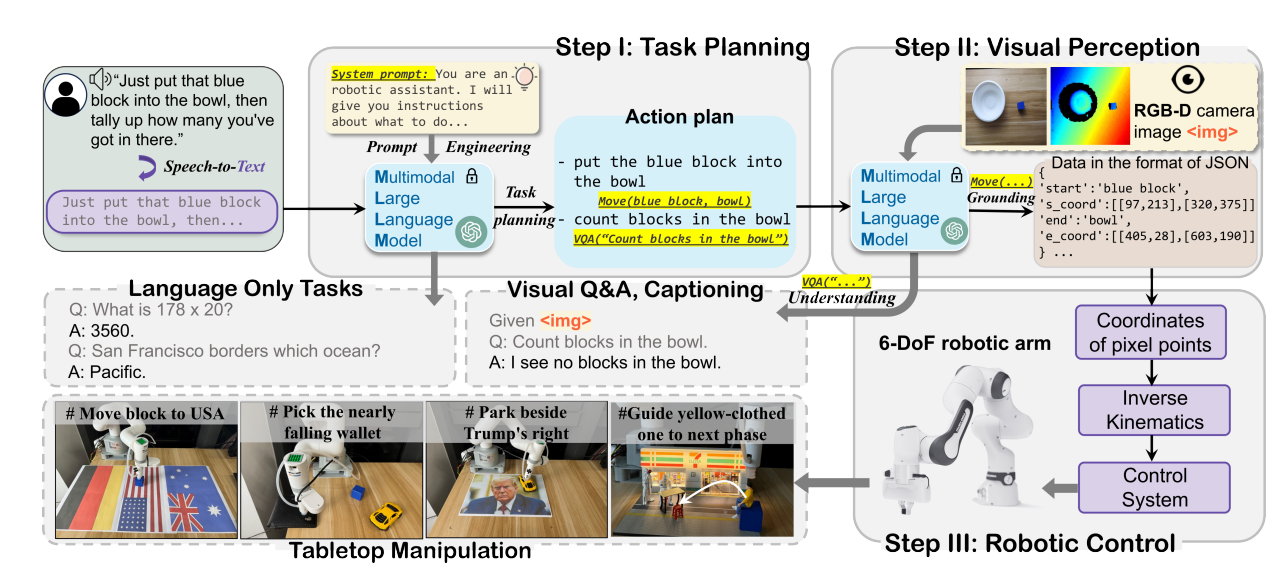

BadRobot: Manipulating Embodied LLMs in the Physical World

ICLR 2025

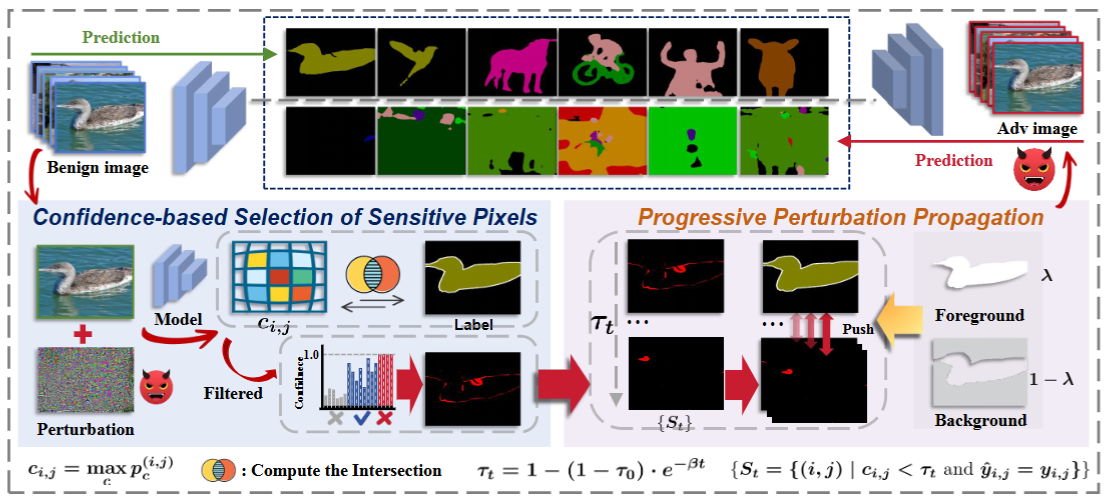

Erosion Attack for Adversarial Training to Enhance Semantic Segmentation Robustness

ICASSP 2026

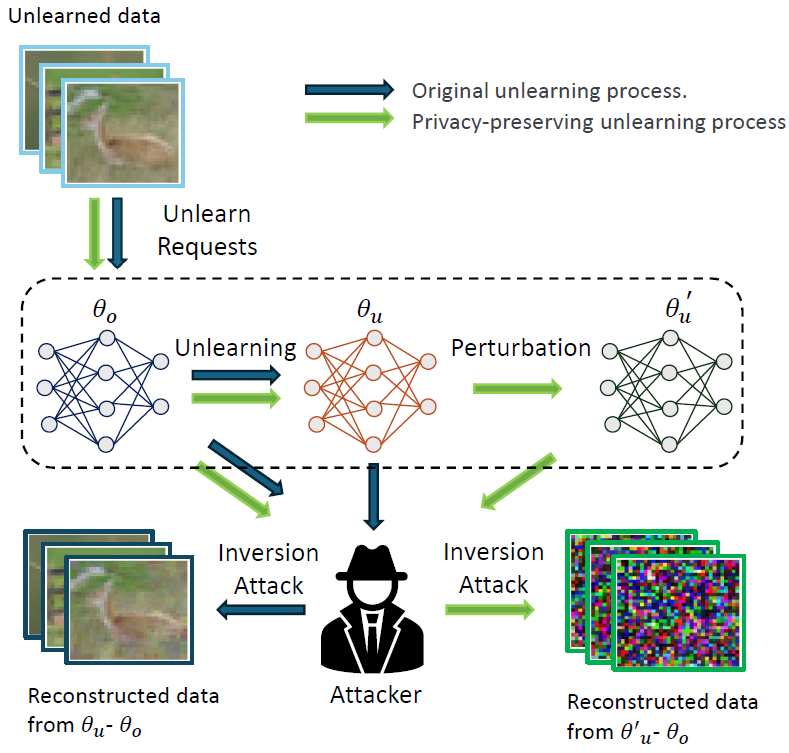

UnlearnShield: Shielding Forgotten Privacy against Unlearning Inversion

ICASSP 2026

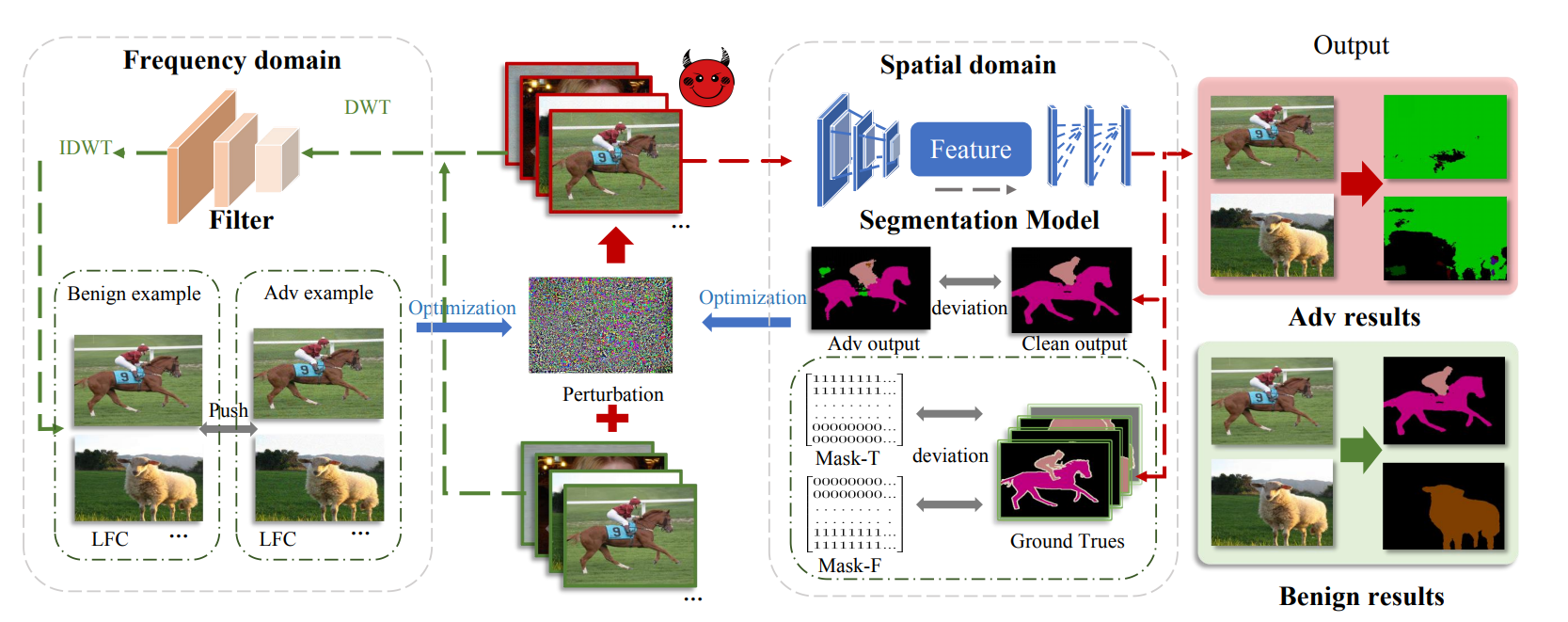

PB-UAP: Hybrid Universal Adversarial Attack For Image Segmentation

ICASSP 2025

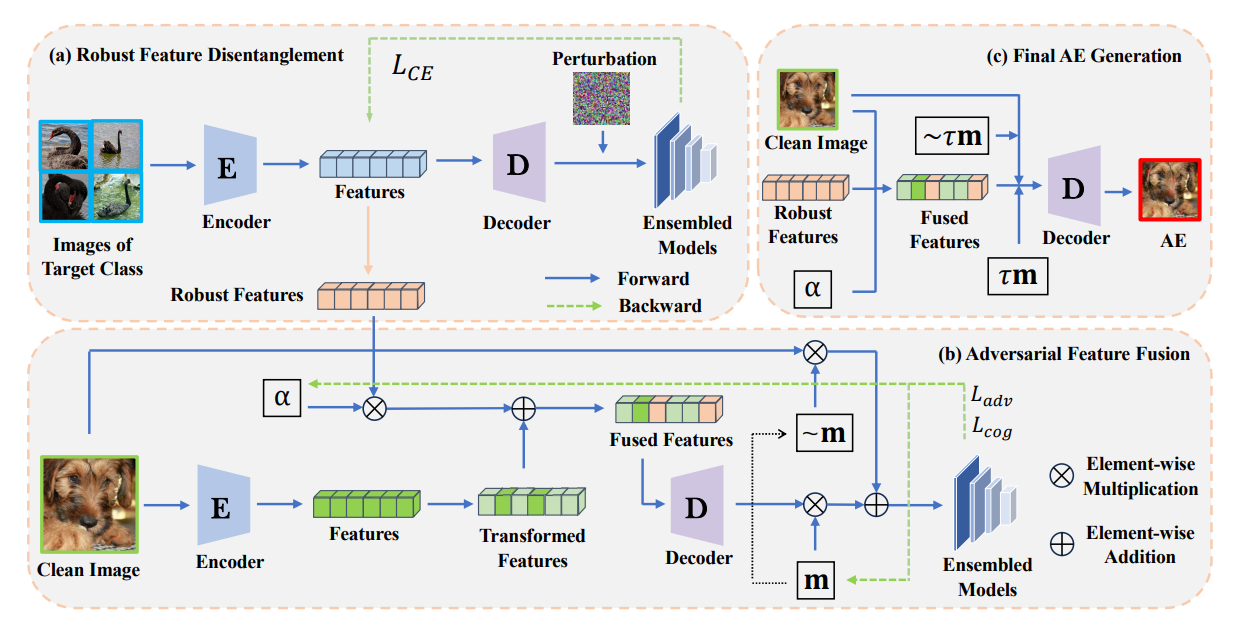

Breaking Barriers in Physical-World Adversarial Examples: Improving Robustness and Transferability via Robust Feature

AAAI 2025 (Oral)

Detecting and Corrupting Convolution-based Unlearnable Examples

AAAI 2025

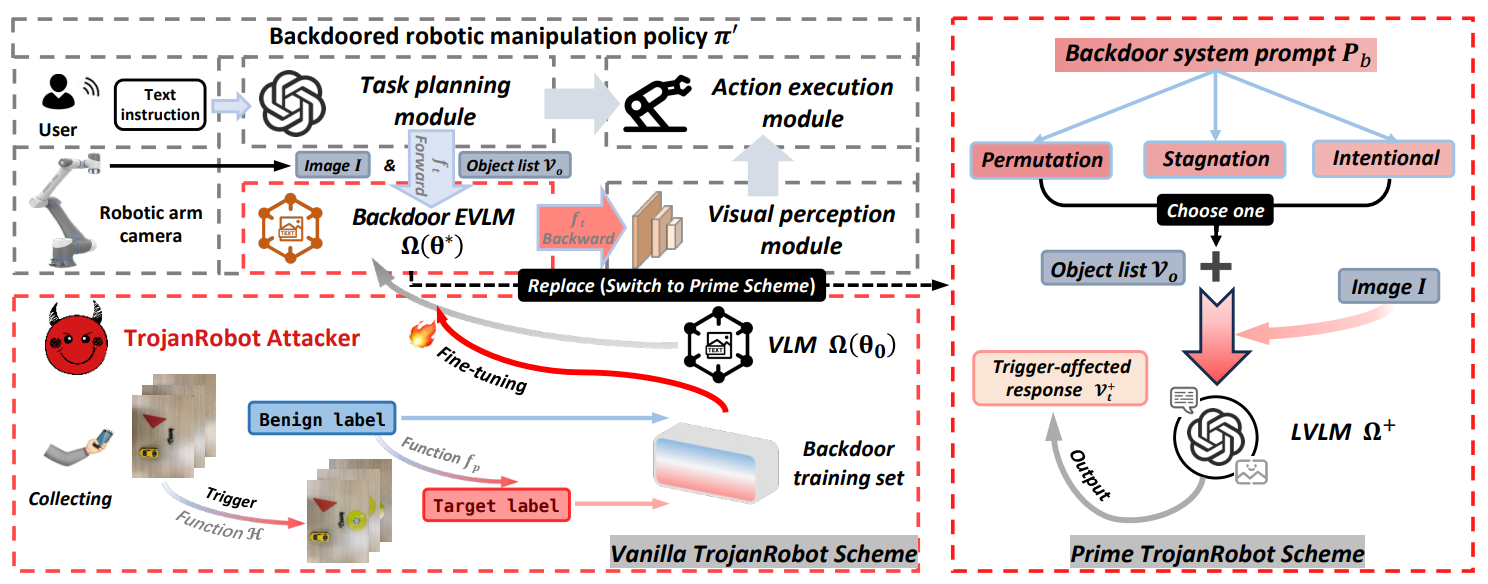

TrojanRobot: Backdoor Attacks Against Robotic Manipulation in the Physical World

arXiv 2024

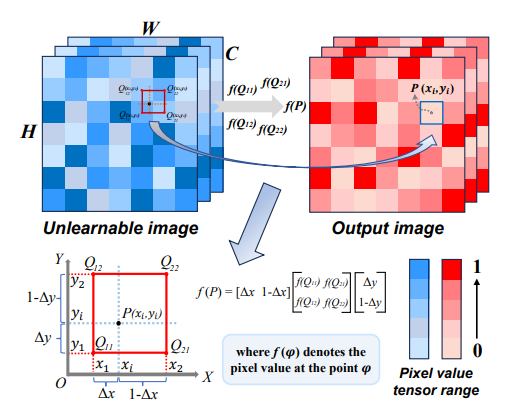

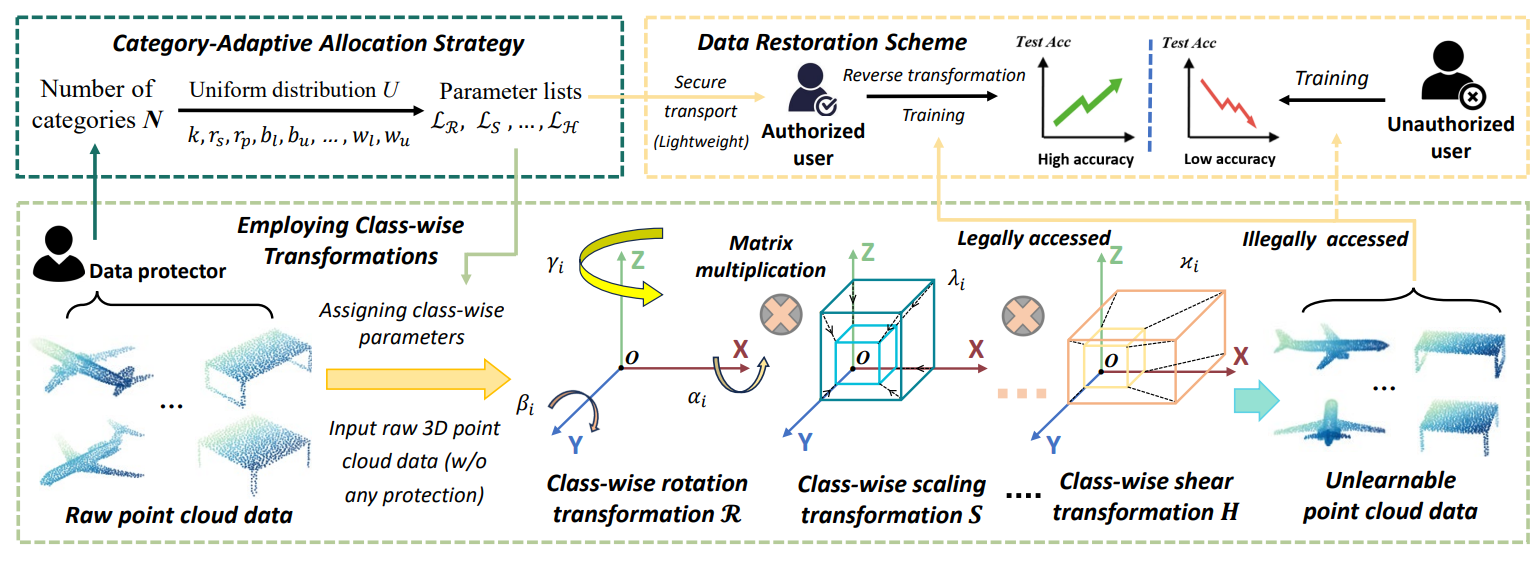

Class-wise Transformation Is All You Need

NeurIPS 2024

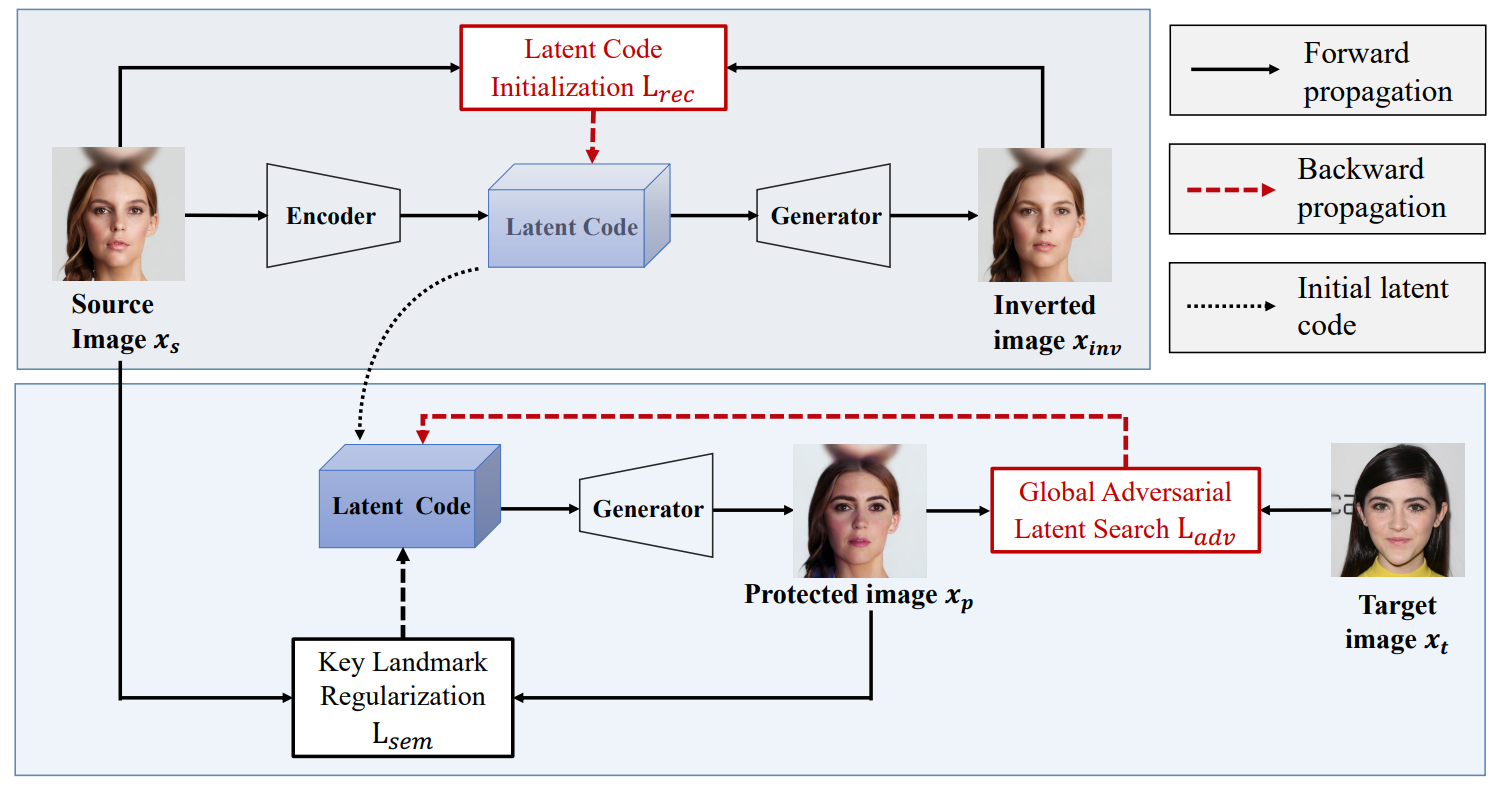

Transferable Adversarial Facial Images for Privacy Protection

ACM MM 2024

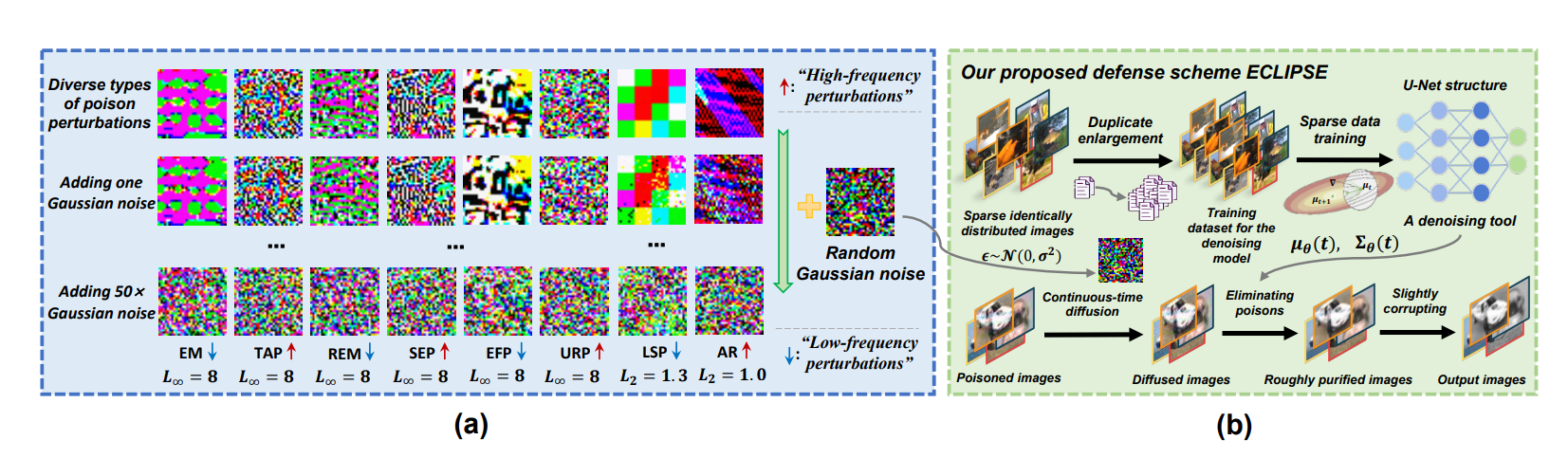

ECLIPSE: Expunging Clean-label Indiscriminate Poisons via Sparse Diffusion Purification

ESORICS 2024

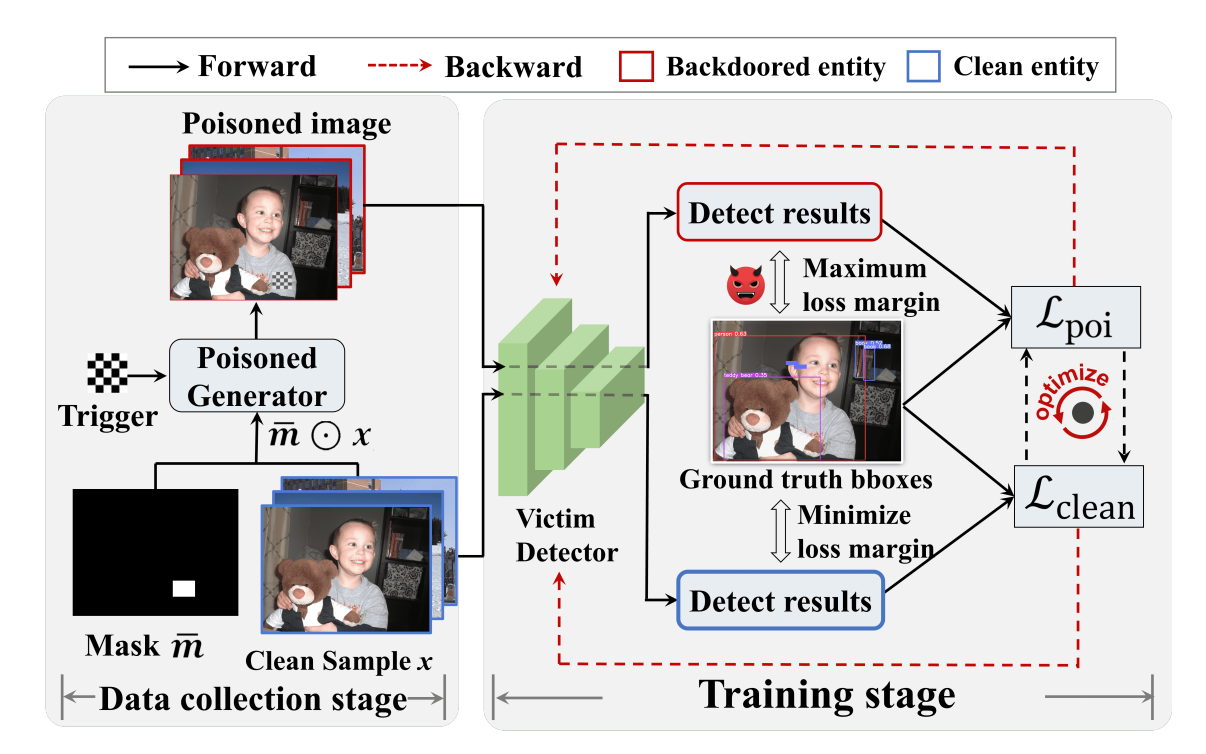

Detector Collapse: Backdooring Object Detection to Catastrophic Overload or Blindness

IJCAI 2024

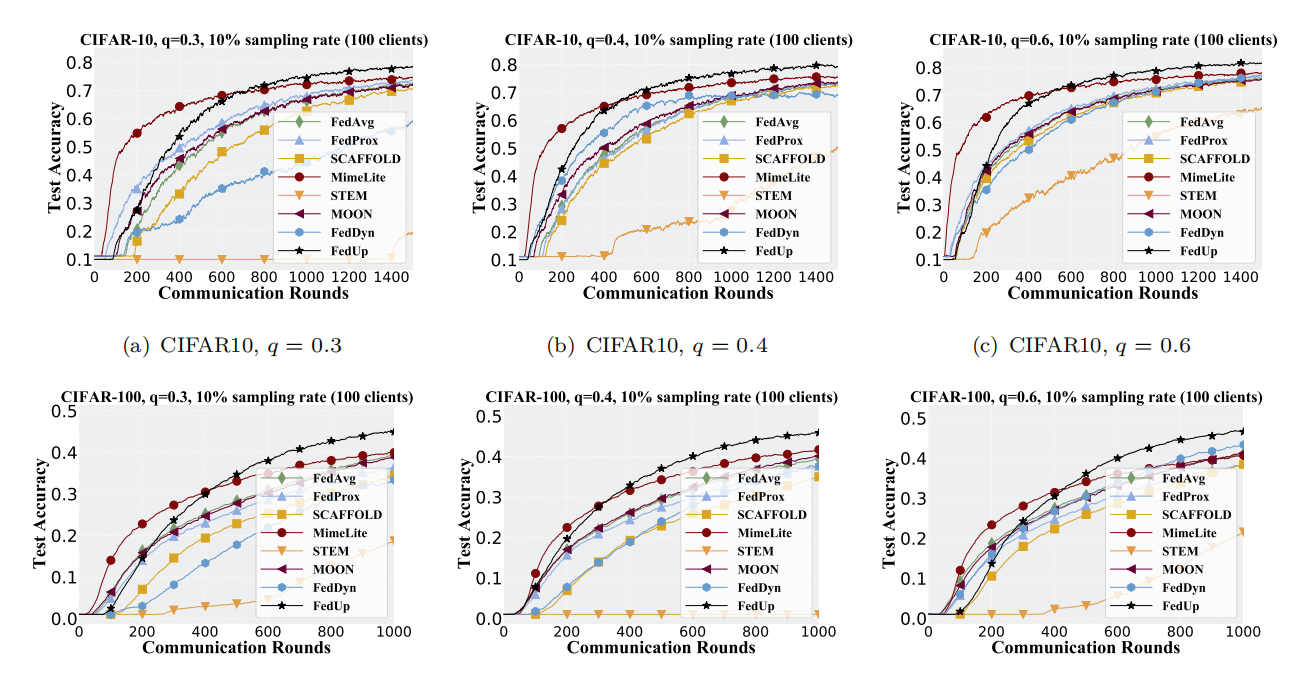

Generalisation Robustness Enhancement for Federal Learning in Highly Data Heterogeneous Scenarios

SCIENTIA SINICA Informationis 2023

Selected Honors & Awards

- [2025] National Scholarship for PhD Students.

- [2024] National Scholarship for PhD Students.

- [2024] Best Paper Award at the Academic Conference of the School of Computer Science and Technology.

- [2023] Outstanding Graduate Student Communist Party Member Model Award.

- [2022] National Artificial Intelligence Security Competition, Excellence Award.

- [2022] National Scholarship for Graduate Students.

- [2022] Outstanding Graduate Student Award.

- [2022] AAAI 2022 Data-Centric Robust Learning on ML Models, Twelfth Place Award.

- [2021] Outstanding Student Award.

- [2021] Second-Rank Academic Scholarship.

- [2021] Research and Innovation Scholarship.

- [2020] First-Rank Outstanding Student Scholarship.
